ROS2与Interbotix系列机械臂教程-手眼标定

文章说明

- 本教程主要如何进行手眼协作中相机和臂的设置与具体操作流程

- 测试环境:Ubuntu 22.04 + ROS2 Humble + wx250s机械臂 + RealSense D435i相机

环境设置

- 首先要搭建支架,并将RealSense摄像头固定在相机支架或三脚架顶部的 1/4 英寸螺钉上

- 然后将支架放在工作区域,调整摄像头位置,使摄像头指向桌面

- 接下来,在桌面且在摄像头的视野范围内上放置一些小的、不反光的物件,并且确保机械臂能够正常抓取

- 物件应该足够小,以便无论夹具从任何方向都能正常抓取起来

- 但它们不能太反光,因为这会干扰深度摄像头通过使用红外光定位它们的能力

- 同样,工作区域不应受到阳光直射,因为这也会干扰摄像头的深度感应能力

- 工作区域示例图:

- 准备一个

apriltag标签,贴在机械臂上,如上图位置所示 apriltag标签保存存放在interbotix_ros_toolboxes/interbotix_perception_toolbox/interbotix_perception_modules/images/tags目录下,打印tag41_12_00005_printoff.pdf出来后再剪一个贴在机械臂上面

获取相机位姿

- 在测试例程前,相机必须知道臂相对于自身的位置

- 一种方法是手动测量从相机到机器人

base_link的TF位差,并将其发布为静态变换,但此方法麻烦且有可能测出不准确的数据 - 相反,使用

apriltag_ros的方法来查找夹具上的AprilTag视觉基准标记相对于相机的TF变换。之后,计算从相机TF到机械臂上的变换base_link并将其发布为静态变换 - 假设正在现实中使用WidowX-250s机械臂,获取静态变换 运行该命令

$ ros2 launch interbotix_xsarm_perception xsarm_perception.launch.py robot_model:=wx250s use_pointcloud_tuner_gui:=true use_armtag_tuner_gui:=true

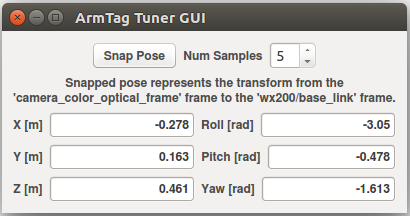

- RViz 应会与两个独立的 GUI 一起弹出,其中一个 GUI 将如下图所示

- 初始设置相机可能不能检测到AprilTag,可通过禁用扭矩方式,手动移动机械臂以便机械臂检测到AprilTag

$ ros2 service call /wx250s/torque_enable interbotix_xs_msgs/srv/TorqueEnable "{cmd_type: 'group', name: 'arm', enable: false}"

手动移动机械臂,使

AprilTag在摄像头中清晰可见(RViz 显示屏左下方的实时视频流应该会对此有所帮助)然后在与之前相同的终端中,按如下方式将机械臂放回原位

$ ros2 service call /wx250s/torque_enable interbotix_xs_msgs/srv/TorqueEnable "{cmd_type: 'group', name: 'arm', enable: true}"

- 现在,在

Armtag Tuner GUI中,单击Snap Pose按钮 - 此时可以随意设置应拍摄的快照数量,确认之后将从快照计算出的位姿取平均值,以得出机械臂相对于相机的更准确位姿

- 检查计算姿势准确性的一种方法是切换 RViz 中的

RawPointCloud显示 - 预期

AprilTag的位置应该位于机器人模型的AR标签链接上(可能在下面几毫米) - 如果不是,请继续按

Snap Pose按钮,直到看起来正确为止 - 终端信息会显示,'static_transforms.yaml' 的文件会自动保存到系统目录中

设置目标抓取框

调整xyx,设置相机画面过滤框

默认情况下,需要抓取的物体应该在框内,比如要将物件放入前方的盒子,那么过滤框中就不应出现都到该盒子

程序只会识别框中的物件

设置完成后,点击

Load Configs按钮保存配置接下来可以进行抓取测试

更多设置信息可参阅此处

其他用于进一步定制的启动文件参数,如下所示

| Argument | Description | Default | Choices |

|---|---|---|---|

| robot_model | One of: (‘px100’, ‘px150’, ‘rx150’, ‘rx200’, ‘wx200’, ‘wx250’, ‘wx250s’, ‘vx250’, ‘vx300’, ‘vx300s’, ‘mobile_px100’, ‘mobile_wx200’, ‘mobile_wx250s’) | ||

| robot_name | name of the robot (typically equal to robot_model, but could be anything) |

LaunchConfig(robot_model) |

|

| base_link_frame | name of the ‘root’ link on the arm; typically base_link, but can be changed if attaching the arm to a mobile base that already has a base_link frame. |

base_link |

|

| use_gripper | if true, the default gripper is included in the robot_description parameter; if false, it is left out; set to false if not using the default gripper. |

true |

true, false |

| show_ar_tag | if true, the AR tag mount is included in the robot_description parameter; if false, it is left out; set to true if using the AR tag mount in your project. |

true |

true, false |

| show_gripper_bar | if true, the gripper_bar link is included in the robot_description parameter; if false, the gripper_bar and finger links are not loaded. Set to false if you have a custom gripper attachment. |

true |

true, false |

| show_gripper_fingers | if true, the gripper fingers are included in the robot_description parameter; if false, the gripper finger links are not loaded. Set to false if you have custom gripper fingers. |

true |

true, false |

| use_world_frame | set this to true if you would like to load a ‘world’ frame to the robot_description parameter which is located exactly at the ‘base_link’ frame of the robot; if using multiple robots or if you would like to attach the ‘base_link’ frame of the robot to a different frame, set this to false. |

false |

true, false |

| external_urdf_loc | the file path to the custom urdf.xacro file that you would like to include in the Interbotix robot’s urdf.xacro file. | ‘’ | |

| hardware_type | configures the robot_description parameter to use the actual hardware, fake hardware, or hardware simulated in Gazebo. |

actual |

actual, fake, gz_classic |

| robot_description | URDF of the robot; this is typically generated by the xacro command. | Command(FindExec(xacro) + ‘ ‘ + LocalVar(‘FindPackageShare(pkg= interbotix_xsarm_descriptions) + ‘urdf’ + LaunchConfig(robot_model)’) + ‘.urdf.xacro ‘ + ‘robot_name:=’ + LaunchConfig(robot_name) + ‘ ‘ + ‘base_link_frame:=’ + LaunchConfig(base_link_frame) + ‘ ‘ + ‘use_gripper:=’ + LaunchConfig(use_gripper) + ‘ ‘ + ‘show_ar_tag:=’ + LaunchConfig(show_ar_tag) + ‘ ‘ + ‘show_gripper_bar:=’ + LaunchConfig(show_gripper_bar) + ‘ ‘ + ‘show_gripper_fingers:=’ + LaunchConfig(show_gripper_fingers) + ‘ ‘ + ‘use_world_frame:=’ + LaunchConfig(use_world_frame) + ‘ ‘ + ‘external_urdf_loc:=’ + LaunchConfig(external_urdf_loc) + ‘ ‘ + ‘hardware_type:=’ + LaunchConfig(hardware_type) + ‘ ‘) |

|

| load_configs | a boolean that specifies whether or not the initial register values (under the ‘motors’ heading) in a Motor Config file should be written to the motors; as the values being written are stored in each motor’s EEPROM (which means the values are retained even after a power cycle), this can be set to false after the first time using the robot. Setting to false also shortens the node startup time by a few seconds and preserves the life of the EEPROM | true |

true, false |

| rs_camera_pointcloud_enable | enables the RealSense camera’s pointcloud. | true |

true, false |

| rbg_camera_profile | profile for the rbg camera image stream, in <width>x<height>x<fps> |

640x480x30 |

|

| depth_module_profile | profile for the depth module stream, in <width>x<height>x<fps> |

640x480x30 |

|

| rs_camera_logging_level | set the logging level for the realsense2_camera launch include | info |

debug, info, warn, error, fatal |

| rs_camera_output_location | set the logging location for the realsense2_camera launch include | screen |

screen, log |

| rs_camera_initial_reset | On occasions the RealSense camera is not closed properly and due to firmware issues needs to reset. If set to true, the device will reset prior to usage. |

false |

true, false |

| filter_ns | namespace where the pointcloud related nodes and parameters are located | pc_filter |

|

| filter_params | file location of the parameters used to tune the perception pipeline filters | LocalVar(‘FindPackageShare(pkg= interbotix_xsarm_perception) + ‘config’ + ‘filter_params.yaml’’) |

|

| use_pointcloud_tuner_gui | whether to show a GUI that a user can use to tune filter parameters | false |

true, false |

| enable_pipeline | whether to enable the perception pipeline filters to run continuously; to save computer processing power, this should be set to false unless you are actively trying to tune the filter parameters; if false, the pipeline will only run if the get_cluster_positions ROS service is called. |

LaunchConfig(use_pointcloud_tuner_gui) |

true, false |

| cloud_topic | the absolute ROS topic name to subscribe to raw pointcloud data | ‘/camera/depth/color/points’ | |

| tags_config | parameter file location for the AprilTag configuration | LocalVar(‘FindPackageShare(pkg= interbotix_perception_modules) + ‘config’ + ‘tags.yaml’’) |

|

| camera_frame | the camera frame in which the AprilTag will be detected | camera_color_optical_frame |

|

| apriltag_ns | namespace where the AprilTag related nodes and parameters are located | apriltag |

|

| camera_color_topic | the absolute ROS topic name to subscribe to color images | ‘camera/color/image_raw’ | |

| camera_info_topic | the absolute ROS topic name to subscribe to the camera color info | ‘camera/color/camera_info’ | |

| armtag_ns | name-space where the Armtag related nodes and parameters are located | armtag |

|

| ref_frame | the reference frame that the armtag node should use when publishing a static transform for where the arm is relative to the camera | LaunchConfig(camera_frame) |

|

| arm_base_frame | the child frame that the armtag node should use when publishing a static transform for where the arm is relative to the camera | LaunchConfig(robot_name) + ‘/’ + LaunchConfig(base_link_frame) |

|

| arm_tag_frame | name of the frame on the arm where the AprilTag is located (typically defined in the URDF) | LaunchConfig(robot_name) + ‘/’ + ‘ar_tag_link’ |

|

| use_armtag_tuner_gui | whether to show a GUI that a user can use to publish the ref_frame to arm_base_frame transform |

false |

true, false |

| position_only | whether only the position component of the detected AprilTag pose should be used when calculating the ref_frame to arm_base_frame transform; this should only be set to true if a TF chain already exists connecting the camera and arm base_link frame, and you just want to use the AprilTag to refine the pose further |

false |

true, false |

| load_transforms | whether or not the static_trans_pub node should publish any poses stored in the static_transforms.yaml file at startup; this should only be set to false if a TF chain already exists connecting the camera and arm base_link frame (typically defined in a URDF), and you would rather use that TF chain as opposed to the one specified in the static_transforms.yaml file |

true |

true, false |

| transform_filepath | filepath to the static_transforms.yaml file used by the static_trans_pub node; if the file does not exist yet, this is where you wouldd like the file to be generated | LocalVar(‘FindPackageShare(pkg= interbotix_xsarm_perception) + ‘config’ + ‘static_transforms.yaml’’) |

|

| use_rviz | launches RViz if set to true |

true |

true, false |

| rviz_frame | desired fixed frame in RViz |

LaunchConfig(robot_name) + ‘/’ + LaunchConfig(base_link_frame) |

|

| rvizconfig | filepath to the RViz config file | LocalVar(‘FindPackageShare(pkg= interbotix_xsarm_perception) + ‘rviz’ + ‘xsarm_perception.rviz’’) |

演示视频

获取最新文章: 扫一扫右上角的二维码加入“创客智造”公众号